Technology, Ethics, and Generative AI

Artificial Intelligence (AI) has burst into mainstream public awareness. The launch of OpenAI’s ChatGPT brought the term ‘generative AI’, or GenAI, into the mainstream vernacular. We knew GenAI innovation was coming and that it would have major impacts. But 2023 marked significant leaps in GenAI, which produces content based on human or data inputs.

For many, GenAI has brought practical, everyday uses. It can boost productivity, provide quick information retrieval, or automate mundane tasks. It can even plan your next vacation itinerary! On the flip side, GenAI may generate inaccurate, biased or unethical outputs. It’s both a technological marvel and an ethical dilemma.

We have all experienced an AI mishap over the years. You may have typed the name of a place into your phone and placed your trust in the navigation app to get you there. In the early days of Google Maps, I was getting directions to the Pentagon and ended up on the wrong side of the Potomac River. I assumed the directions were accurate without verifying them, which led to a confusing and frustrating result. There have also been reports of drivers following GPS systems into dangerous situations. Some have driven into hazards, construction zones, parks or even bodies of water.

This leads to a very important question: how much blind trust should we place in smart technology without checks and balances? Shouldn’t we always provide some human oversight?

Since that fateful crossing of the Potomac, extraordinary advancements have crafted AI into a reliable tool for many uses. Its power has begun to shape industries, economies, and even human interaction. Let’s explore GenAI’s benefits, risks and ethical dilemmas.

Mainstream Adoption of AI

Thanks to modern computing, machines can learn, adapt, multitask, and make autonomous decisions. AI has swiftly become an integral part of enterprises around the world and in sectors like finance, healthcare, the creative arts, and the public sector. From improving customer experiences and personalizing education to enhancing healthcare diagnostics and optimizing business operations, the potential might be limitless.

One area of significant advancement is cybersecurity. But there are both advantages and major risks in that arena. Organizations use AI for faster threat detection, behavioral analytics, network security, authentication, and response. AI-enabled tools often provide vast improvements in accuracy, adaptability and efficiency compared to traditional methods, which historically have been manual, time-consuming tasks. GenAI’s ability to continuously learn from past experiences while predicting and anticipating future scenarios will be especially helpful as it evolves.

Appropriate machine-learning capability is crucial when analyzing behavioral activity to reduce false positives and help cybersecurity teams avoid alert fatigue. AI improves network anomaly detection, system integration, and signal-to-noise ratios.
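
To make the false-positive trade-off concrete, here is a minimal Python sketch of a baseline-and-threshold anomaly check. The metric (logins per hour), the sample data, and the `threshold` parameter are all hypothetical, and the simple z-score rule stands in for far richer models; it is not a description of how any particular product detects anomalies.

```python
from statistics import mean, stdev

def flag_anomalies(history, observed, threshold=3.0):
    """Return indices of observed values that deviate from the baseline.

    history: past values of one metric for one user (hypothetical data).
    observed: new values to check against that baseline.
    threshold: number of standard deviations treated as anomalous (assumed).
    """
    baseline = mean(history)
    spread = stdev(history)
    if spread == 0:
        # Flat baseline: any value that differs from it is unusual.
        return [i for i, v in enumerate(observed) if v != baseline]
    return [
        i for i, v in enumerate(observed)
        if abs(v - baseline) / spread > threshold
    ]

# Hypothetical logins-per-hour baseline for one account.
history = [4, 5, 6, 5, 4, 6, 5, 5]
observed = [5, 7, 40]

strict = flag_anomalies(history, observed, threshold=3.0)  # flags only 40
loose = flag_anomalies(history, observed, threshold=1.0)   # flags 7 and 40
```

The looser threshold also flags the mildly unusual value, which is the tension behind alert fatigue: a more sensitive rule catches more, but hands analysts more alerts to triage.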

The financial sector has been at the forefront of leveraging AI for security purposes. Faster detection of deceptive activity can have a major impact on a bank’s ability to prevent theft and recover fraudulently transferred funds. Innovation continues in predicting unusual behavior and in enabling AI to take prescribed next steps. You can also automate penetration testing with AI, which lets you test more regularly to identify gaps and build the speed needed for resilience during an attack.

GenAI also plays a crucial role in enhancing threat intelligence within cybersecurity applications. AI can rapidly process and analyze vast amounts of data and user activity information from diverse sources, such as logs, network traffic, and behaviors, to identify patterns indicative of cyber threats. By analyzing historical data, AI can help predict potential future threats and vulnerabilities so organizations can implement stronger security measures against emerging threats. AI systems can also continuously learn and adapt to evolving cyber threats and enhance the overall security posture of an organization in real time.

Veritas is committed to helping you maximize the productivity potential of GenAI tools within your business, while maintaining critical compliance and security protocols. Our solutions offer advanced features like AI-powered anomaly detection and data classification models. These tools streamline data organization, illuminate dark data, enhance threat detection, and enable both compliance and security. We have even more innovations on the horizon. Unlike others who have hastily adopted AI, we've meticulously considered how "AI assistance" can most benefit our customers. Learn more about our AI-powered data security in this podcast: Unlocking the Future of AI, Security, and Veritas Solutions.

Risks of AI and Cybersecurity

Along with all the beneficial innovation and advancements, cybercriminals are also taking advantage of AI and large language models (LLMs). AI-generated, flawless phishing messages already make cyberattacks harder to detect. Gone are the days when misspellings and grammar errors were dead giveaways of phishing attacks. Simple GenAI prompts can help cybercriminals create fake phone calls, deepfake photos and videos, and better-quality communications.

Cybercriminals can and will use AI at every phase of an attack’s kill chain. Imagine AI alerting a cybergang when a network breach succeeds. AI can write malware and help spread it once inside the network. AI can then mine enormous amounts of data to identify key assets to steal.

Malicious actors may attempt to manipulate or deceive AI algorithms, or even compromise the data used to train them, a technique known as data poisoning. Subtle, carefully crafted data inputs can cause AI systems to misclassify or make erroneous decisions. Poisoning can pose substantial threats to financial, healthcare, and critical infrastructure systems. By manipulating AI into making unethical decisions, malicious actors could also interfere with government agencies at any level and cause societal disruption.
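
As a rough illustration of how tampered training data can change a model's decisions, the Python sketch below trains a toy nearest-centroid classifier twice: once on clean records and once after mislabeled records are mixed in. The risk scores, labels, and helper names are hypothetical, and real models and attacks are far more complex.

```python
from statistics import mean

def train(samples):
    """Compute one centroid per label from (value, label) pairs."""
    labels = {label for _, label in samples}
    return {
        label: mean(v for v, lab in samples if lab == label)
        for label in labels
    }

def classify(centroids, value):
    """Return the label whose centroid is closest to value."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))

# Hypothetical risk scores: low values are benign, high values malicious.
clean = [(1, "benign"), (2, "benign"), (3, "benign"),
         (8, "malicious"), (9, "malicious"), (10, "malicious")]

# Tampered set: high-scoring records mislabeled as "benign" are mixed in.
poisoned = clean + [(9, "benign"), (10, "benign"), (10, "benign")]

before = classify(train(clean), 7)     # model trained on clean data
after = classify(train(poisoned), 7)   # same input, tampered training data
```

In this toy setup the same input is classified as malicious by the clean model and as benign by the tampered one, which is why validating the provenance and integrity of training data matters.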

Ethics and Responsibility

Ethics in GenAI extend beyond algorithmic fairness to include privacy, bias, transparency, and accountability concerns. It’s crucial to ensure that GenAI systems prioritize fairness, mitigate bias, and maintain transparency throughout the lifecycle. The intentions behind implementation carry equal weight.

Many organizations tout their use of GenAI. They publicize policies aiming to embed ethical considerations into their technological advancements, promising to prioritize societal good. However, when it comes to the development of GenAI, the ethical lines start to blur.

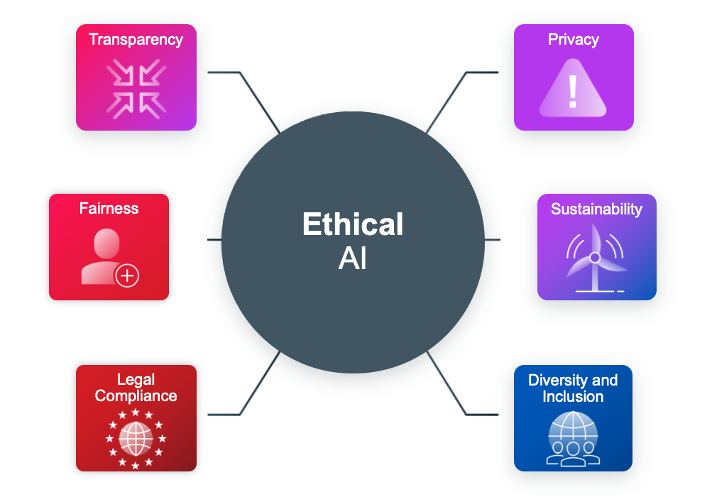

Elements of Ethical AI

Ethical development of GenAI incorporates the following concepts:

- Transparency includes corporate behavior like sharing AI principles, methodologies, and potential risks. Genuine transparency includes acknowledging limitations and learning from unintended consequences.

- Privacy emerges as a linchpin of ethical AI. Data privacy demands attention to the collection and use of personal information. Respecting user privacy, data minimization, and consent become imperative. AI applications must be transparent about how they use data.

- Sustainability refers to conserving resources for the long term. The compute power required to train machine learning models consumes a tremendous amount of electricity and other natural resources.

- Diversity and inclusion are critical in AI development and deployment. There are notable examples of why inclusivity should not be disregarded. Responsible AI practices can set benchmarks, inspire trust, and pave the path for sustainable innovation. Done right, AI should make lives better — and make businesses better, too.

- Legal compliance and responsible policies require training employees about the risks of sharing information. In a real-life example, employees copied corporate trade secrets into public AI models to develop content. This kind of oversight can put your most valuable information, and your organization, at risk.

- Fairness requires that those who are training machine learning models consider the source of the information. For example, using artists’ works without their consent to generate profit or progress, while failing to acknowledge those artists, violates the principle of fairness.

In the coming months, debate will intensify over what kind and what level of regulations are necessary to guide ethical AI development. Organizations embracing AI must consider ethics as an ongoing commitment. AI requires continuous evaluation, adaptation, and engagement with multiple and diverse stakeholders.

As GenAI systems ingest more data, security becomes integral. Safeguarding against breaches, unauthorized access, or malicious manipulation of AI-driven systems is paramount. You can automate decisions, but human validation should always be part of the process, guided by the core principles above. Tech, legal, and ethics leaders need to identify the point at which they incorporate human-level inspection. Can you identify where the AI model is collecting the data it’s using? Can you validate that the information it’s using is accurate and gathered legally?
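
One way to picture a human validation point is a routing rule that sends low-confidence or high-impact proposals to a reviewer instead of applying them automatically. The Python sketch below is illustrative only: the `Decision` fields, the action names, and the `min_confidence` cutoff are assumptions, and where the checkpoint belongs is a policy choice for each organization.

```python
from dataclasses import dataclass

@dataclass
class Decision:
    action: str        # what the model proposes, e.g. "block_transfer"
    confidence: float  # model's self-reported score in [0, 1] (assumed)
    high_impact: bool  # whether the action is costly to reverse (assumed)

def route(decision, min_confidence=0.9):
    """Decide whether a proposal is applied automatically or reviewed."""
    if decision.high_impact or decision.confidence < min_confidence:
        return "human_review"
    return "auto_apply"

# Hypothetical proposals from an AI-driven security tool.
queue = [
    Decision("quarantine_email", 0.97, high_impact=False),
    Decision("block_transfer", 0.95, high_impact=True),
    Decision("disable_account", 0.60, high_impact=False),
]
routes = [route(d) for d in queue]
```

Here only the routine, high-confidence action is applied automatically; the high-impact and low-confidence proposals both wait for a person to confirm them.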

Next Steps

Approaching GenAI proactively, with questions that test whether a deployment aligns with ethical, legal, and broader business considerations, encourages responsible development.

Modern data management solutions encompass an array of advanced technologies that guide organizations towards autonomous action and incisive decision-making. To remain competitive, businesses should focus on adopting AI/ML technologies and tools for data management to optimize existing systems. They should develop and clearly communicate corporate guidelines around the appropriate use of GenAI and its oversight. Organizations should also train employees to use AI-assisted tools and ensure they’re comfortable with the nuances of the data environment. Finally, they should proactively build partnerships to strengthen data management capability, especially in cybersecurity and compliance.

Veritas is committed to helping you navigate your AI journey while retaining complete control of your data. The secret to a successful AI strategy starts with enterprise-level secure data management and cyber resilience. Ensure your data is secure, protected and governed through enterprise-grade data management and cyber resilience with Veritas.