How Scalable is Cloud Scale Technology for Cloud Storage?

IT customers today have multiple cloud options available to support their corporate digital transformation initiatives. Cloud architects and business owners are aware of the many benefits of a multi-cloud transition; we often hear about convenience, accessibility, flexibility, and worry-free data growth, among others. Chief among these benefits, however, is the overall cost-effectiveness of cloud computing and cloud storage, driven by elasticity and scalability. It’s fairly straightforward to see how moving to the cloud can be cost-effective: it reduces or eliminates upfront investments in hardware, software, and networking infrastructure. Additionally, IT teams can become more effective by focusing on new and innovative aspects of technology. A less obvious contributor to cost-effectiveness, however, is scalability. It’s not news that cloud vendors will happily scale compute and storage resources as needed, and that these resources may be less expensive in the cloud than on-premises. But where does one draw the line between cost and benefit? Highly efficient, simple, and optimized IT solutions can effectively minimize costs as you scale, especially in a multi-cloud environment.

Cloud Scale and object storage background

As enterprises accelerate their move to a multi-cloud strategy, they face the challenge of managing and protecting their data in a cost-effective, yet secure and compliant, manner. The key to this strategy is the ability to scale while keeping costs in check. So, what is Veritas’s approach? Veritas recently launched a new-generation architecture called Cloud Scale Technology. It reduces cloud computing costs by as much as 40% (compared to previous versions of NetBackup) by enabling NetBackup to operate at cloud scale with cloud-native service elasticity, typically referred to as auto-scaling. Auto-scaling dynamically provisions additional compute resources to meet increased load and then releases those resources when the load diminishes.
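To make the auto-scaling idea concrete, here is a minimal Python sketch of a generic target-tracking rule: work out how many instances the current load needs, then clamp that number between a floor and a ceiling. It illustrates the concept only and is not NetBackup’s actual scaling logic; `ScalingPolicy`, `desired_instances`, and the abstract "load units" are hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    """Hypothetical target-tracking policy (illustration, not NetBackup's logic)."""
    capacity_per_instance: float  # load units one instance can absorb (assumed)
    min_instances: int = 0        # floor kept running even when idle
    max_instances: int = 100      # ceiling to cap spend


def desired_instances(policy: ScalingPolicy, current_load: float) -> int:
    """Instances needed for the current load, clamped to the policy bounds.

    Rising load scales out; falling load scales back in toward min_instances,
    which is where idle resources are released.
    """
    needed = math.ceil(current_load / policy.capacity_per_instance)
    return max(policy.min_instances, min(policy.max_instances, needed))


if __name__ == "__main__":
    policy = ScalingPolicy(capacity_per_instance=50.0, min_instances=1, max_instances=20)
    # Simulated load: a backup window ramps up, peaks, then drains.
    for load in [10, 120, 480, 2000, 60, 0]:
        print(f"load={load:>5} -> instances={desired_instances(policy, load)}")
```

In the simulated run, the instance count climbs with the backup window (1, 3, 10), hits the cap of 20 at peak, and falls back to 2 and then the floor of 1 as load drains, which is the release-when-idle behavior described above.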

So if Cloud Scale Technology operates at web scale, a logical follow-up question is: how scalable is it? We address part of the answer here. Cloud Scale Technology in NetBackup spans both compute and storage. Other blogs and assets have covered the compute side of web scale, focusing on auto-scaling and containerization. Today we shed some light on the cloud storage side of Cloud Scale Technology.

Before we dive deeper, let us quickly cover the two basic types of storage available in the cloud today. The first is block storage. We commonly associate block storage with a filesystem, although some storage methods and applications use block storage without one. The second is object storage. One of the fundamental differences between the two is how much complexity is exposed to the storage user. With block storage, users manage directories and files, and each write goes through a series of transformations that turn a file into fixed-size blocks, tracked by filesystem metadata such as inodes, that fit a disk-based medium. Ultimately, the user needs to be aware of that filesystem and the underlying block storage, and manage them accordingly. This holds even for a cloud/virtual block device running redundantly on top of storage managed by a Cloud Service Provider. Object storage simplifies things by presenting an addressable storage target where users treat their files and data as objects, and the user’s responsibility ends there. The object storage platform fully abstracts the actual backend storage, allowing the storage consumer to focus on the work they need to get done.
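The difference in user-facing complexity can be illustrated with a toy, in-memory object store. This is a hypothetical Python sketch, not any cloud provider’s API: `ToyObjectStore`, `put`, `get`, and `list_keys` are illustrative names. The user deals only with keys, bytes, and metadata; everything below that line (blocks, inodes, replication, placement) is the platform’s concern.

```python
from __future__ import annotations

import hashlib
from dataclasses import dataclass, field


@dataclass
class StoredObject:
    data: bytes
    metadata: dict[str, str]
    checksum: str  # SHA-256 fingerprint of the object's content


@dataclass
class ToyObjectStore:
    """Hypothetical in-memory object store illustrating the user-facing model.

    Objects are addressed by key in a flat namespace. How the bytes are laid
    out, replicated, or placed on disks is hidden behind put/get.
    """
    _objects: dict[str, StoredObject] = field(default_factory=dict)

    def put(self, key: str, data: bytes, metadata: dict[str, str] | None = None) -> str:
        """Store (or overwrite) a whole object and return its checksum."""
        checksum = hashlib.sha256(data).hexdigest()
        self._objects[key] = StoredObject(data, dict(metadata or {}), checksum)
        return checksum

    def get(self, key: str) -> bytes:
        """Return the whole object; raises KeyError if the key does not exist."""
        return self._objects[key].data

    def list_keys(self, prefix: str = "") -> list[str]:
        """List keys by prefix; "folders" are just shared key prefixes."""
        return sorted(k for k in self._objects if k.startswith(prefix))
```

Keys such as `backups/vm01.img` look like paths, but the namespace is flat: a "directory" is only a shared key prefix, so there is no filesystem tree for the user to create or maintain.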

Block storage and object storage both have their pros and cons. However, one area where object storage easily beats block storage is capacity scalability at a much lower cost. This blog from TechTarget compares the two and references object storage scalability in Yottabytes. So how much is one Yottabyte? Simply put, it is one trillion Terabytes. While current data center hardware may not come close to this scale, cloud storage may start chasing that number in the near future. After all, in the mid-1990s, 9 GB hard disk drives (HDDs) were considered high capacity. By 2007, the 1 TB drive became commercially available; today, 24 TB HDDs and 100 TB SSDs are on the market, while 1 to 8 TB of capacity is readily available for desktops. At this accelerating pace of capacity and data growth, we can predict that enterprise applications will need, or even demand, enterprise cloud storage that scales to the Yottabyte range sooner than we anticipate today.
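The unit arithmetic is easy to check. This short Python sketch assumes decimal (SI) units, where each step is a factor of 1,000; binary units (TiB, YiB) would give somewhat larger byte counts. `UNITS` and `convert` are illustrative names.

```python
# Decimal (SI) storage units: each step is a factor of 1,000 (assumption).
UNITS = {"GB": 10**9, "TB": 10**12, "PB": 10**15, "EB": 10**18, "ZB": 10**21, "YB": 10**24}


def convert(amount: float, from_unit: str, to_unit: str) -> float:
    """Convert an amount between decimal storage units."""
    return amount * UNITS[from_unit] / UNITS[to_unit]


if __name__ == "__main__":
    print(f"1 YB = {convert(1, 'YB', 'TB'):,.0f} TB")  # one trillion TB
    print(f"1 YB = {convert(1, 'YB', 'ZB'):,.0f} ZB")
    # Capacity growth from a mid-1990s 9 GB HDD to a 24 TB HDD.
    print(f"24 TB / 9 GB = {convert(24, 'TB', 'GB') / 9:,.0f}x")
```

The last line shows roughly a 2,667-fold increase in single-drive HDD capacity since the mid-1990s.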

Cloud storage – Cloud Scale strengths and impacts

So, are we implying that NetBackup Recovery Vault or NetBackup object-based MSDP (Media Server Deduplication Pool) storage is scalable to one Yottabyte today? No, not yet. First, we like ourselves: if we said yes, Veritas NetBackup engineers and architects would start giving us some very unpleasant calls. Second, no one can confidently make such a claim yet, because provisioning or subscribing to that much storage just for testing would be prohibitively expensive. However, we can use some simple mathematics to run a theoretical comparison of several data protection technologies available today.

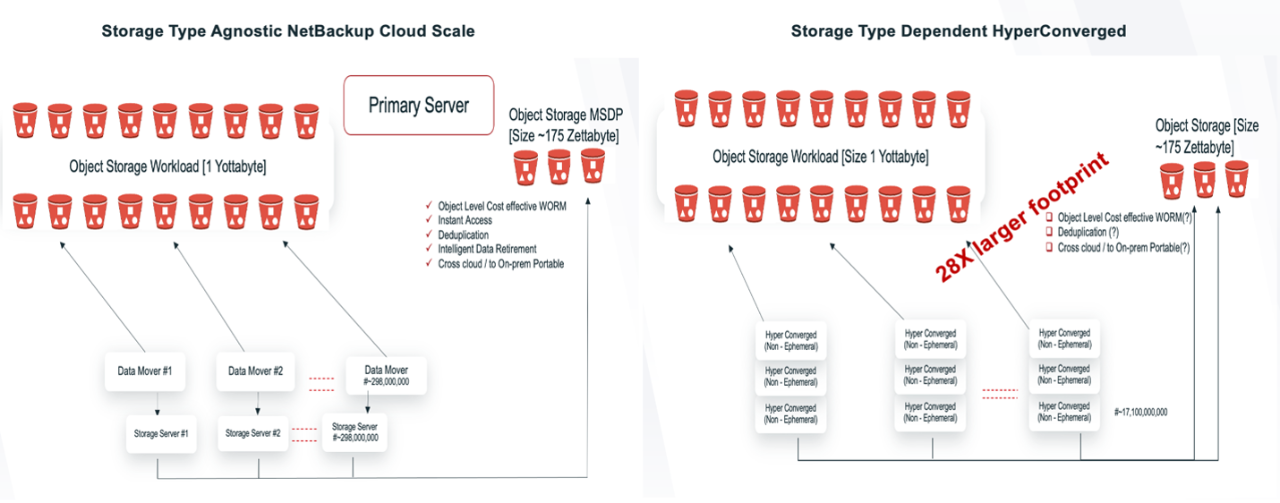

The current NetBackup object-based MSDP has a capacity limit of 2.4 PB per deduplication pool. Note that, functionally, this object-based MSDP storage is on par with block-based storage, supporting immutability, deduplication, instant access, and universal shares. With NetBackup Cloud Scale Technology, four data mover and storage server VMs can handle one such pool.
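The deduplication ratio used in the next step is simply logical bytes written divided by unique bytes stored. This post does not describe how MSDP chunks or fingerprints data, so the Python sketch below swaps in the simplest generic technique, fixed-size chunking with SHA-256 fingerprints, purely to show how such a ratio arises. `dedup_ratio` and the 4 KiB chunk size are assumptions.

```python
from __future__ import annotations

import hashlib


def dedup_ratio(streams: list[bytes], chunk_size: int = 4096) -> float:
    """Logical bytes written divided by unique bytes stored (illustrative).

    Uses fixed-size chunks identified by SHA-256 fingerprints; a chunk counts
    toward stored bytes only the first time its fingerprint is seen.
    """
    seen: set[str] = set()
    logical = stored = 0
    for stream in streams:
        for offset in range(0, len(stream), chunk_size):
            chunk = stream[offset:offset + chunk_size]
            logical += len(chunk)
            fingerprint = hashlib.sha256(chunk).hexdigest()
            if fingerprint not in seen:
                seen.add(fingerprint)
                stored += len(chunk)
    return logical / stored if stored else 1.0


if __name__ == "__main__":
    # Hypothetical 16 KiB dataset made of four distinct 4 KiB chunks.
    dataset = b"".join(hashlib.sha256(str(i).encode()).digest() * 128 for i in range(4))
    # Six full backups of unchanged data.
    print(dedup_ratio([dataset] * 6))  # 6.0
```

Six full backups of unchanged data yield exactly 6:1, because only the first copy’s chunks are stored; real-world ratios depend on change rate, retention, and the chunking method used.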

Assuming a very conservative deduplication ratio of 6:1, one Yottabyte of data shrinks to ~170 Zettabytes. Given the figures above, NetBackup would need approximately 598 million cloud VMs to support 1 Yottabyte of front-end capacity (FETB) using NetBackup Cloud Scale Technology object-based MSDP. Of course, this does not account for future Cloud Scale improvements. When those are implemented, the containerized, temporary nature of the data movers and storage servers will allow them to scale down during idle periods. If 598 million cloud VMs comes as a surprise, let us walk you through another scenario.
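The sizing estimate follows a simple chain: divide front-end data by the deduplication ratio, divide the result by the per-pool capacity limit, and multiply by the VMs per pool. The Python sketch below encodes that chain; `vms_required` is a hypothetical helper, and decimal units are an assumption. Using only the inputs quoted in this post (2.4 PB per pool, four VMs per pool), it yields roughly 278 million VMs rather than ~598 million, so the published figure may rest on additional assumptions not spelled out here (for example, unit base or how VMs are counted per pool); treat the parameters as adjustable.

```python
import math

# Decimal (SI) units, an assumption of this sketch.
PB = 10**15
ZB = 10**21
YB = 10**24


def vms_required(fetb_bytes: float, dedup_ratio: float,
                 capacity_per_unit_bytes: float, vms_per_unit: int) -> tuple[int, int]:
    """Return (storage units needed, VMs needed) for a back-of-envelope sizing.

    A "unit" is a deduplication pool (or cluster) with a fixed capacity limit,
    served by a fixed number of VMs. Partial units round up.
    """
    stored = fetb_bytes / dedup_ratio
    units = math.ceil(stored / capacity_per_unit_bytes)
    return units, units * vms_per_unit


if __name__ == "__main__":
    print(f"Stored after 6:1 dedup: {YB / 6 / ZB:.1f} ZB")
    pools, vms = vms_required(YB, 6, 2.4 * PB, 4)
    print(f"Pools: {pools:,}  VMs: {vms:,}")
```

Because every term is a parameter, the same function can be rerun with different pool limits, dedup ratios, or VM counts to see how sensitive the total is to each assumption.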

Several vendors with hyperconverged designs entered the data protection market a few years back. Without going into how some of them claimed to be ‘born in the cloud’, let us evaluate the scenario we are comparing today. To clarify, we are comparing the ability to store primary backup copies on cloud object storage. This discussion does not cover any vendor’s or design’s long-term archiving capability of sending backups or snapshots to object storage. The comparison is purely about landing backup data directly in object-based cloud storage with deduplication, without first storing it on block storage, even for a day.

One of these vendors today has a much lower limit of a few TBs per hyperconverged cluster for storing backups on object-based storage. Even ignoring the fact that those cluster nodes also need high-speed SSD block storage, the same arithmetic shows that, with the same deduplication ratio, roughly 17.1 billion cloud virtual machines would need to be provisioned. That is a whopping 28 times more than NetBackup would need, which translates to 28 times higher cost, if not more. Note also that this gap may widen as efficiency and scalability improve in upcoming releases.
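Because both designs store the same deduplicated data, the data size cancels out of the comparison: the VM ratio depends only on each design’s VMs per storage unit divided by its capacity per unit. The Python sketch below shows that relationship; `relative_vm_count` is a hypothetical helper, and since the post gives the competitor’s cluster capacity only as "a few TBs", the example inputs are placeholders.

```python
def relative_vm_count(cap_a: float, vms_per_unit_a: int,
                      cap_b: float, vms_per_unit_b: int) -> float:
    """How many times more VMs design B needs than design A for the same data.

    For a fixed amount of deduplicated data, VM count scales as
    vms_per_unit / capacity_per_unit, so the data size cancels out.
    Capacities only need to share the same unit.
    """
    return (vms_per_unit_b / cap_b) / (vms_per_unit_a / cap_a)


if __name__ == "__main__":
    # Placeholder inputs: design A holds 8 capacity units with 4 VMs,
    # design B holds 2 capacity units with 4 VMs, so B needs 4x the VMs.
    print(relative_vm_count(8.0, 4, 2.0, 4))
    # Ratio of the VM totals quoted in the post.
    print(f"{17.1e9 / 598e6:.1f}x")
```

The post’s own totals give about 28.6x, rounded to 28 in the text. Equating that with a 28x cost difference assumes a similar per-VM cost and excludes the competitor’s SSD block storage, which would only add to its side of the ledger.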

This difference highlights a crucial advantage of NetBackup Cloud Scale Technology: NetBackup MSDP is independent of the underlying storage type, whether block or object. Unlike some other technologies, the same NetBackup Cloud Scale code works seamlessly with both storage types across clouds, without needing a different flavor or software version. This storage-agnostic technology is a great relief for large financial institutions looking to build a cloud exit strategy. As a side note, it remains to be seen which other vendors can support instant access from object-based primary backup storage, not just for VMs but for other workloads. It is also worth noting that, unlike with some competitors, immutability does not triple the amount of required object storage. While these aspects may seem minor, they have a sizable impact on cost and risk. Hence, customers need to evaluate all technology choices closely rather than falling for taglines such as ‘web scale’ or ‘born in the cloud’.

From a financial standpoint, block storage costs at least 5 to 10 times as much as object storage on today’s popular clouds. In addition, the carbon footprint of unnecessary data storage could affect financial statements as Scope 2 and Scope 3 emissions gain traction in financial reporting. Veritas Cloud Scale efficiency will become a decisive element in reducing carbon emissions.
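The cost argument here, together with the earlier point about immutability overhead, reduces to capacity × footprint multiplier × price. The Python sketch below uses normalized placeholder prices (object storage = 1.0 per TB-month), not real cloud list prices, plus a hypothetical `copies` multiplier to model any extra footprint; `monthly_storage_cost` is an illustrative name.

```python
def monthly_storage_cost(stored_tb: float, price_per_tb_month: float,
                         copies: float = 1.0) -> float:
    """Monthly cost of keeping `stored_tb` of backup data on one storage tier.

    `copies` models any extra footprint (for example, an immutability scheme
    that keeps additional copies); 1.0 means no overhead.
    """
    return stored_tb * copies * price_per_tb_month


if __name__ == "__main__":
    stored_tb = 1_000   # deduplicated backup data kept in the cloud (placeholder)
    object_price = 1.0  # normalized price per TB-month (placeholder)
    scenarios = {
        "object storage": monthly_storage_cost(stored_tb, object_price),
        "object storage, 3x immutability footprint": monthly_storage_cost(
            stored_tb, object_price, copies=3),
        "block storage at 5x object price": monthly_storage_cost(stored_tb, 5 * object_price),
        "block storage at 10x object price": monthly_storage_cost(stored_tb, 10 * object_price),
    }
    for name, cost in scenarios.items():
        print(f"{name:<45} {cost:>8,.0f}")
```

Because cost is linear in each factor, a 3x immutability footprint or a 5x to 10x block-storage premium multiplies the bill directly, independent of the absolute prices plugged in.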

While considering the object storage scalability you need today, ask yourself a simple question: do you want to keep your backups on not-so-cost-effective block storage when cost-effective object storage can do the job?

We strongly encourage you to watch the Conquer Every Cloud event at http://vrt.as/CEC to learn more.